Floating above the world of computing—and the investors who love it—flies the notion that quantum physics will soon change everything. Quantum computers will design us new drugs, new batteries, and more. And then they’ll break standard encryption protocols, leaking our credit card data before we can buy any of these wonders.

You can’t swing a simultaneously dead and alive cat without hitting another claim that we are this close to harnessing the magical quantum computing bit—the qubit—that physicist Richard Feynman first challenged computer scientists to deliver nearly 45 years ago.

The problem is that building his “machine of a different kind,” one that takes advantage of quantum physics’ tantalizing weirdness, requires really hard physics. Whether Microsoft or Amazon or anyone else gushing in press releases has achieved this, however, remains nebulous.

For now, it seems, your encrypted data is safe(ish). “Seems like it’s always five years away,” as one financial industry observer told the Wall Street Journal in February, speaking of the technology—or 20 years, according to Nvidia’s Jensen Huang.

Or maybe it will never arrive. Today’s quantum computing investors are essentially backing competing physics experiments in a derby to create Feynman’s machine. While they’ve made progress, no clear winner has emerged. That’s despite claims of moves into practical use and demonstrations of small quantum computers outperforming ordinary ones in special cases.

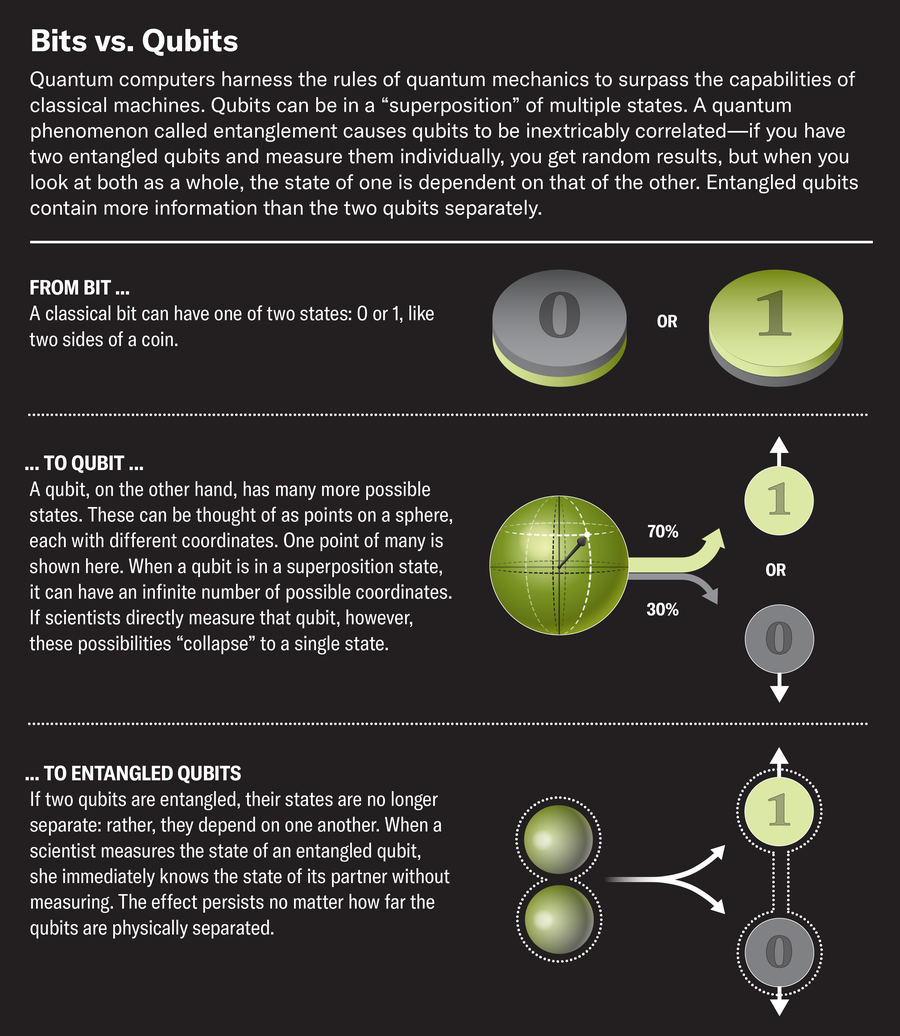

The fundamental problem remains, however: making the robust “qubits” at the heart of a quantum computer, as opposed to the simpler “bits” your laptop processes, is hard-to-do physics.

Today’s computers just manipulate bits, which are fixed at a value of either 0 or 1. They perform calculations in assembly-line fashion. Qubits instead are suspended in a superposition of 0 and 1, like Schrödinger’s poor cat: until it is measured, a qubit’s state is a weighted blend of both possibilities, and only the measurement forces it to settle on one value or the other. Instead of an assembly line of bits, an array of linked, or “entangled,” qubits effectively solves certain problems in exponentially fast leaps by holding many possible values simultaneously as it calculates.
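To make superposition and entanglement a little more concrete, here is a toy classical sketch in Python. It is an illustration under simplifying assumptions, not a model of any chip in this story, and the names `measure_state`, `ONE_QUBIT`, `BELL_PAIR` and `register_size` are hypothetical. It stores a quantum state as a table of amplitudes: a measurement returns a single outcome with probability equal to the squared amplitude, and an entangled pair of qubits always gives matching results. Describing a general state of n qubits takes 2^n amplitudes, which is the exponential growth behind the hopes for quantum speedups.

```python
import math
import random

# Toy classical model (an illustrative assumption, not real hardware):
# a quantum state is a dict mapping basis outcomes ("0", "01", ...) to
# amplitudes whose squared magnitudes sum to 1.
AMP = 1 / math.sqrt(2)

# One qubit in an equal superposition of 0 and 1.
ONE_QUBIT = {"0": AMP, "1": AMP}

# Two entangled qubits (a Bell pair): only "00" or "11" can be observed.
BELL_PAIR = {"00": AMP, "11": AMP}


def is_normalized(state, tol=1e-12):
    """Check that the outcome probabilities sum to 1."""
    return abs(sum(abs(a) ** 2 for a in state.values()) - 1.0) < tol


def measure_state(state, rng):
    """Sample one outcome with probability |amplitude|^2 (the Born rule)."""
    r = rng.random()
    cumulative = 0.0
    for bits, amp in state.items():
        cumulative += abs(amp) ** 2
        if r < cumulative:
            return bits
    return bits  # guard against floating-point round-off in the last step


def register_size(n_qubits):
    """Amplitudes needed to describe a general state of n qubits."""
    return 2 ** n_qubits


if __name__ == "__main__":
    rng = random.Random(0)
    singles = [measure_state(ONE_QUBIT, rng) for _ in range(10_000)]
    print("single qubit: 0s =", singles.count("0"), "1s =", singles.count("1"))
    pairs = {measure_state(BELL_PAIR, rng) for _ in range(1_000)}
    print("Bell pair outcomes seen:", sorted(pairs))
    for n in (1, 2, 10, 50):
        print(n, "qubits ->", register_size(n), "amplitudes")
```

Running it should show a roughly even split of 0s and 1s for the single qubit, only "00" and "11" for the entangled pair, and an amplitude count that doubles with every added qubit. A dictionary keeps the sketch short; a serious simulator would use dense complex arrays and apply gate operations to them, and it is exactly that doubling that makes large quantum systems so hard to simulate on ordinary computers.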

The trouble has been finding the right kind of qubit, because qubits are very fragile while calculations are performed on them, which can lead to disqualifying error rates. That has led to years of incremental advances. The latest turn comes in Microsoft’s February announcement of a “topological” quantum computer, touted in a news release and accompanied by an experimental paper in Nature. (Nature and Scientific American are both part of Springer Nature.) Basically, the makers of Windows are betting they can create a bleeding-edge quantum physics effect inside superconducting aluminum wires. That effect is the induction of a still-theoretical Majorana particle that behaves magnetically as both an electron and an anti-electron (as in antimatter) in those wires. That magnetic state of the “quasiparticle” inside the wire is the qubit.

Theoretically, it would be a more stable qubit because interference would have to scramble both ends of the wire simultaneously to destroy any information encoded in it. Microsoft said in the news release: “The Majorana 1 processor offers a clear path to fit a million qubits on a single chip that can fit in the palm of one’s hand,” referring to the number of qubits thought necessary for a useful quantum computer.

That sounds great, except Nature’s editors, critics soon noted, included a peer review note with the Microsoft study that disavowed some of the news release’s claims. In particular, they noted the paper had not definitively shown “Majorana zero modes” in the computer. Translated, that means they want to see more proof. (Microsoft claimed it had created these quasiparticles in 2018, only to have to retract the claim, doubtless adding to the scrutiny.)

Google likewise unveiled a “state-of-the-art quantum chip” for computing last December. It uses “transmon” qubits, first proposed in 2007, which rely on oscillating currents traveling inside 150-micrometer-wide superconducting capacitors. The chip, called Willow, holds 105 qubits. Only 999,895 to go. Meta’s Mark Zuckerberg soon cast doubt on the technology, depressing quantum computing stocks.

And finally, to end the month of February, Amazon announced an “Ocelot” quantum computing chip with nine qubits, along with its own Nature paper. That chip manipulates a superconducting resonator to serve as a qubit, within which error-tolerant “cat” qubits (named in honor of Schrödinger’s kitten, natch) are controlled by photons, or light particles. The error-correcting capabilities of cat qubits were first demonstrated only in 2020.

Other quantum computing approaches would suspend single ions on a circuit board, turning a single cadmium ion into a qubit, for example, or would use the photons inside laser pulses as qubits that are read out by photodetectors.

These are all big bets on engineering 21st-century physics into machinery, with notable progress coming in the past decade, making it possible to see the quantum glass as half-full instead of half-empty. There are many ways to skin the quantum computing cat, it turns out.

Yet no certainty exists that any of these models will work in the end. That raises fears of the field becoming, like nuclear fusion, another physically possible but Sisyphean technology, always 20 years away.

The myriad approaches to creating a quantum computer, all still provisional on whether they will scale up to the million-qubit realm, reinforce that the field is still in its adolescence. News-release-driven announcements of new chips, with accompanying Wall Street hype, threaten a new kind of tech bubble just as the AI one is fading, which may explain a lot of the attention on the technology.

I write this as a science reporter whose first quantum computing story covered a proposal in the journal Science in 1997 to cook up a quantum computer in a coffee cup relying on a nuclear magnetic resonance (NMR) spectrometer (an idea still being pursued as of last year). “There’s lots of physics between here and a working quantum computer,” IBM’s David DiVincenzo told me then. He wasn’t kidding.

This is an opinion and analysis article, and the views expressed by the author or authors are not necessarily those of Scientific American.